Understanding Data Ownership with AI Vendors

- Cameron Duncan

- Apr 28, 2025

Introduction

Artificial intelligence is transforming the way organizations operate, and much of this innovation is powered by APIs (application programming interfaces) that connect businesses to advanced AI models. However, as companies rush to integrate these capabilities, one critical issue is often overlooked: data ownership.

An API is a set of rules that allow different software applications to communicate with each other. But not all API integrations are created equal - especially when it comes to data rights and privacy. Understanding where your data goes, who can access it, and how it might be used is essential for protecting your intellectual property and maintaining your competitive edge.

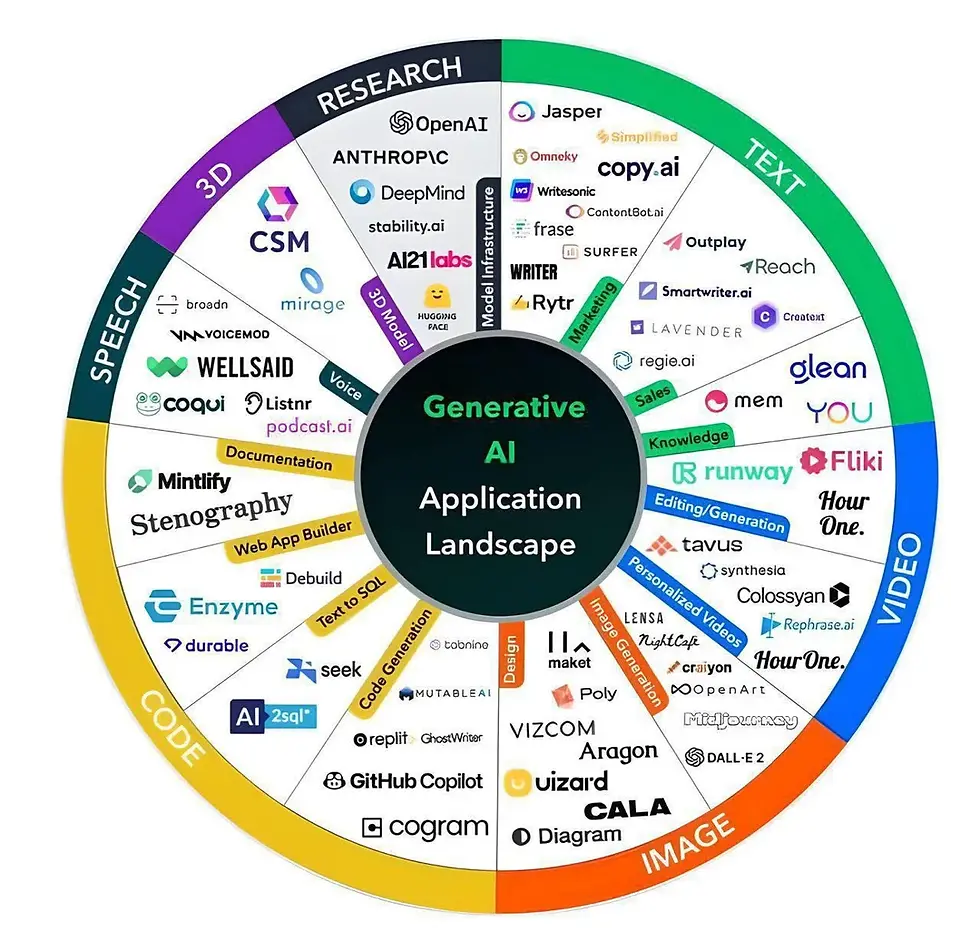

The Landscape of AI API Providers

The AI ecosystem is rapidly expanding, with a variety of providers offering access to powerful language models and other AI tools. Broadly, these providers fall into two categories:

Direct AI Model Vendors: Companies such as OpenAI and Anthropic that provide direct access to their proprietary models via API.

Major Cloud Providers: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud. These companies offer AI APIs as part of their broader cloud platforms.

The distinction between these two categories is more than just branding - it has real implications for how your data is handled. When you use an AI API through a major cloud provider, you benefit from their established infrastructure, compliance standards, and, crucially, their contractual commitments regarding data privacy. In contrast, direct API integrations with AI vendors may be governed by very different terms, often granting the vendor broader rights to use your data.

What Happens to Your Data?

When you send data to an AI model via an API, it travels as an HTTPS request. These requests are transient: they move from your server to the provider’s server over TLS, the encryption protocol behind HTTPS, so anyone intercepting the traffic in transit cannot read it. Once the AI model processes your request, the response is sent back over the same encrypted connection.
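Encryption in transit is something your own client code can enforce rather than assume. The following is a minimal Python sketch using only the standard library. It refuses non-HTTPS endpoints and requires certificate and hostname verification with TLS 1.2 or newer (TLS being the modern successor to SSL). The TLS 1.2 floor and the `post_json` helper are illustrative assumptions, not requirements of any particular provider.

```python
import ssl
import urllib.request
from urllib.parse import urlparse


def make_strict_tls_context() -> ssl.SSLContext:
    """Client-side TLS context that verifies certificates and hostnames."""
    # create_default_context() already enables certificate and hostname checks.
    context = ssl.create_default_context()
    # Refuse SSLv3, TLS 1.0 and TLS 1.1 (the TLS 1.2 floor is an assumed policy).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def require_https(url: str) -> str:
    """Reject any endpoint URL that would send data unencrypted."""
    if urlparse(url).scheme != "https":
        raise ValueError(f"refusing to send data to a non-HTTPS URL: {url}")
    return url


def post_json(url: str, body: bytes, timeout: float = 30.0) -> bytes:
    """Hypothetical helper: POST a JSON body over verified TLS and return the raw response."""
    request = urllib.request.Request(
        require_https(url),
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout, context=make_strict_tls_context()) as response:
        return response.read()
```

Encryption in transit says nothing about what the provider does with the data once it arrives, which is why the terms discussed next matter.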

But what happens to your data after that? Here’s where the terms and conditions matter:

Direct AI Vendor Terms:

The terms of direct AI vendors (such as OpenAI, Groq, and others) vary by product, plan, and account settings, and some reserve the right to use your API requests and responses for model training or improvement. Even where a vendor grants you a royalty-free license to use the output, its terms may retain broad rights to your input data. These terms can change frequently, making it difficult to ensure ongoing compliance or to know exactly how your data is being used.

Cloud Providers’ Contractual Data Commitments:

AWS, Azure, and Google Cloud have clear contractual language stating that the data you send through their AI APIs remains your property. They commit not to use your data for model training and limit any other use to what their terms set out, principally fulfilling your request. If they were to violate this, you would have legal recourse.

The risk is clear: if you’re not careful, your proprietary information could end up being used to train someone else’s AI model - potentially eroding your competitive advantage.

The Importance of Data Ownership and Control

For modern businesses, data is more than just a resource - it’s a core asset and a source of competitive differentiation.

Retaining ownership and control over your data is critical for several reasons:

Intellectual Property Protection:

Your data, processes, and knowledge base are what set you apart. If this information is used to train third-party models, you risk losing your unique edge.

Regulatory Compliance:

Many industries are subject to strict data privacy and security regulations. Losing control over where your data goes or how it’s used can expose you to legal and financial risks.

Client Data:

Many companies have NDAs with their clients. Sending client data to AI services whose terms let the vendor reuse it, without the client’s consent, could violate those written agreements and sour relationships with clients.

In short, giving up control over your data can have far-reaching consequences, some of which may not become apparent until it’s too late.

Best Practices for Secure AI API Integration

To protect your data and your business, consider the following best practices:

Choose Cloud Providers with Strong Commitments:

Whenever possible, use AI APIs from major cloud providers that make explicit contractual commitments about how customer data is used. This helps ensure your data remains your property and is not used for model training.
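One way to keep a "reviewed cloud providers only" policy in place is to check every outbound AI call against an allowlist of endpoints your team has vetted. The following is a minimal Python sketch, assuming all AI traffic in your code passes through one helper. The hostnames are illustrative placeholders (a hypothetical Azure OpenAI resource name and example regions), not a recommended configuration. Confirm the exact endpoints in each provider’s documentation.

```python
from urllib.parse import urlparse

# Hypothetical allowlist of exact hostnames approved after contract review.
# "my-resource" and the regions are placeholders for your own deployments.
APPROVED_AI_HOSTS = frozenset({
    "my-resource.openai.azure.com",             # Azure OpenAI resource (placeholder name)
    "bedrock-runtime.us-east-1.amazonaws.com",  # Amazon Bedrock runtime, one region
    "us-central1-aiplatform.googleapis.com",    # Google Vertex AI, one region
})


def check_ai_endpoint(url: str) -> str:
    """Return url unchanged if it targets an approved host over HTTPS, else raise."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if parsed.scheme != "https" or host not in APPROVED_AI_HOSTS:
        raise PermissionError(f"AI endpoint not on the approved list: {url}")
    return url
```

The sketch matches exact hostnames rather than domain suffixes. A broad suffix such as `amazonaws.com` would also match many services that the AI terms you reviewed do not cover.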

Control Your API Keys and Instances:

Architect your solutions so that you, not your vendor, control the API keys and cloud instances. This limits third-party access and gives you greater oversight.
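One common pattern is to have the key issued to your organization’s own cloud account and injected at runtime from your secrets store, rather than embedded in a vendor’s product or in your source code. The following is a minimal Python sketch, assuming the key arrives as an environment variable. `AI_API_KEY`, `load_api_key`, and `mask_key` are hypothetical names.

```python
import os


class MissingCredentialError(RuntimeError):
    """Raised when the organization-owned API key has not been provisioned."""


def load_api_key(env_var: str = "AI_API_KEY") -> str:
    """Read an API key issued to your own cloud account from the environment.

    AI_API_KEY is a hypothetical variable name. Because your organization issues
    the key, you can rotate or revoke it without involving a third party.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise MissingCredentialError(f"{env_var} is not set; provision it from your secrets store")
    return key


def mask_key(key: str) -> str:
    """Hide all but the last four characters so keys never appear whole in logs."""
    if len(key) <= 8:
        return "*" * len(key)  # too short to reveal any part safely
    return "*" * (len(key) - 4) + key[-4:]
```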

Review Terms and Conditions Regularly:

The landscape is constantly evolving. Make it a habit to review your providers’ terms of service and privacy policies to ensure they still align with your requirements.

Limit Sensitive Data Exposure:

Be mindful of what data you send to external APIs. For highly sensitive or proprietary information, consider on-premises solutions or private cloud deployments.
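When some data must go to an external API, a pre-send filter can strip obvious identifiers first. The following is a minimal Python sketch, assuming simple regular expressions are acceptable for your data. The three patterns (email addresses, the US Social Security number format, and card-like digit runs) are illustrative and will miss many forms of sensitive data. A filter like this complements keeping the most sensitive material on-premises or in a private deployment; it does not replace it.

```python
import re

# Illustrative rules only; production PII detection needs broader, tested coverage.
# Order matters: more specific patterns run before the generic digit-run rule.
_REDACTION_RULES = [
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),          # US SSN format
    (re.compile(r"\b\d(?:[ -]?\d){12,15}\b"), "[CARD]"),      # 13 to 16 digits, optional separators
]


def redact(text: str) -> str:
    """Replace matches of each rule with a placeholder before text leaves your systems."""
    for pattern, placeholder in _REDACTION_RULES:
        text = pattern.sub(placeholder, text)
    return text


print(redact("Contact jane.doe@example.com, SSN 123-45-6789, card 4111 1111 1111 1111."))
# Contact [EMAIL], SSN [SSN], card [CARD].
```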

Conclusion

As AI becomes more deeply embedded in business operations, understanding data flows and ownership is no longer optional - it’s essential. Not all API integrations are created equal, and the wrong choice can put your intellectual property, compliance posture, and competitive advantage at risk. Organizations should demand transparency and control from their AI vendors.

If you’re unsure about your current AI API strategy or want to ensure your data is protected, contact Hallian Technologies for a consultation. We’ll help you navigate the complexities of AI integration, so you can innovate with confidence.